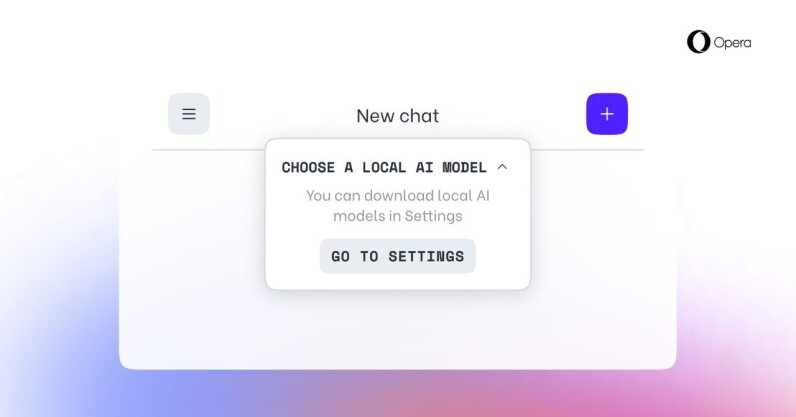

Opera has become the first major browser to offer built-in access to local AI models. Starting today, the Oslo-based company is introducing experimental support for 150 variants of local Large Language Models (LLMs) from approximately 50 model families. These include Mixtral from Mistral AI, Llama from Meta, Gemma from Google, and Vicuna. According to Jan Standal, VP at Opera, built-in local LLMs come with a series of advantages. “Local LLMs allow you to process your prompts directly on your machine, without the need to send data to a server,” Standal told TNW. “Adding access to them in the…

This story continues at The Next Web